Dietmar Pichler talks about the dangers of Internet 2.0, Donald Trump’s Twitter ban, how to combat disinformation, why deepfakes pose the next level of cyber danger and how to keep democracy alive in a manipulative digital space.

Host: Balázs Csekő

At the speed of light. That is how the World Wide Web has developed since its creation. It was only two decades ago that people feared the Y2K bug. Some even painted doomsday scenarios: with the arrival of the year 2000, personal computers would stop working and nuclear weapons could explode. Despite some deliberate attempts to cause panic in the population, scientists were well prepared.

“It was a bit of a hoax,” Mr Pichler said.

In the era of Internet 1.0, two major types of users shared the digital space. While geeks and nerds, people with computer knowledge, already dominated the scene, most people used personal computers only to download content. The rise of Internet 2.0 completely changed the situation and made the differences between the 1990s and the early 2000s clear. “Suddenly everyone became a publisher.”

Today, the online sphere is flooded with user-generated content. Still, there is a big difference between average users and professional journalists. “People do not check the information that they publish,” Mr Pichler stated.

Social media, with its vast amount of information, plays a prominent role in everyday life. Many users don’t even leave such platforms, as they can find ‘theoretically everything’ there. Even big media outlets like CNN and NBC have discovered the advantages of Facebook, Twitter and similar services, and publish their content on social networking sites.

Whose responsibility?

A decade ago, during the Arab Spring era, social media was widely seen as a democratizing force. In the eyes of many critics, this perception has since shifted entirely. Social networking sites are often accused of fuelling the spread of fake news and disinformation.

Can Facebook and Twitter take responsibility for what is happening on these platforms? Yes and no. “Yes, in the sense that they are providing the technology, but they are not a publishing house,” Mr Pichler said.

If someone posts nude pictures or any other kind of inappropriate content, it is definitely not Mark Zuckerberg’s responsibility. “On the other hand, one could say he should monitor his platform,” he added.

“I think users should be responsible for the content. They can decode information, platforms can’t do it for us.”

Banning accounts

The de-platforming of former US President Donald Trump from social media has sparked a major debate about what is allowed on such platforms and how far users can go in expressing their opinions.

Although Mr Pichler acknowledges that Donald Trump’s Twitter account was ‘full of very problematic tweets’, he doesn’t support the ban. At the same time, the expert finds it “ridiculous” that Twitter allows the account of the Iranian Grand Ayatollah Ali Khamenei to operate. “More dangerous things are happening there. That is hate speech on a dictatorship level,” he claimed.

Smaller channels can also cause serious harm by spreading dangerous information. These accounts, however, are usually not removed. They need to be properly monitored before they can be removed.

How to combat disinformation?

Social media literacy is one way to tackle this harmful phenomenon. Teaching it should start in school, but teachers today severely lack knowledge of social media. “Old people are teaching by the book these days,” Mr Pichler explained.

Critical thinking and an interdisciplinary approach are always essential when assessing information. To detect disinformation successfully, answering the following questions can help: What sources can be used to verify the claim? Who is sending it out into the world? What is the narrative behind it? “Once the Ayatollah is involved, you can easily imagine what his motives are.”

Help already comes too late for all those who are deep in a ‘parallel world’. Although the number of such victims is small, “nothing can be done” about this kind of damage anymore. For the expert, people “in between worlds” pose an even greater danger.

Fake news and deepfake

Because of its complexity, the process of detecting fake news is currently not working well. “You have to be an expert for everything that’s going on in the world.”

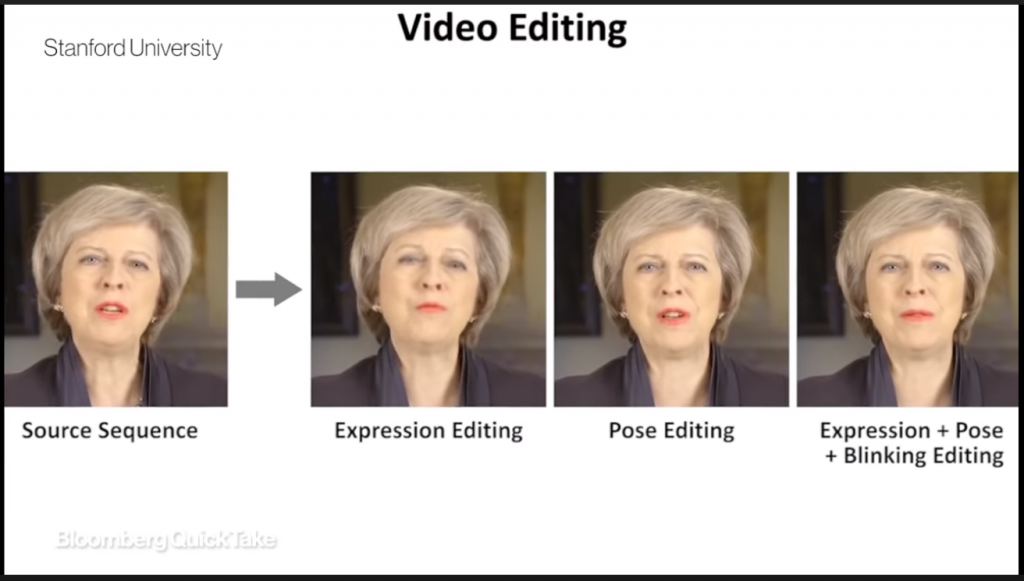

Photo credit: It’s Getting Harder to Spot a Deep Fake Video, Bloomberg Quicktake, YouTube

Algorithms also have their limits when it comes to finding disinformation. With simple facts, such as the year of an event or a major detail of a movie, they can operate successfully, but they may struggle with complex issues. Furthermore, current algorithms are not designed to handle jokes. “It will take years until they work well in determining when somebody tells a joke,” Mr Pichler said.

The next generation of cyber danger is knocking on the door and getting better every day thanks to machine learning: deepfakes. These are synthetic media in which a person in an existing image, video or audio recording is replaced with someone else’s likeness. “If someone records our conversation, they can easily use our voices.”

Technical deficiencies such as low-resolution video or a lack of HD images can help deepfakes spread. For the moment, technology and a sharp eye are the remedy for this kind of manipulation.

Keeping democracy alive

The internet has been a battlefield between democracies and authoritarian regimes for decades, and it will increasingly remain one. But how can democratic countries preserve their democracies in a world where manipulation attempts in the digital sphere will not diminish?

“If we are not able to keep freedom of speech and freedom of press, then our democracy is gone,” Mr Pichler stated. “Even if we speak about Donald Trump, we need a society that is able to deal with such kind of populism, with messages and even hate speech,” he added.

The goal should be to create the digital citizens of the future, who are aware of the opportunities and the risks. “We need digital citizens who can distinguish between the truth and things that are completely out of this world.”

Cover photo credit: Left: Real footage of Vladimir Putin. Right: Simulated video using new Deep Video Portraits technology. Gif: H. Kim et al., 2018/Gizmodo

Dietmar Pichler is a social media literacy expert and programmatic director of the Vienna-based Center for Digital Media Competence. He is also the founder and head editor of the European media platform stopovereurope.eu and a board member of Vienna goes Europe, a non-partisan association. Twitter: @DietmarPichler1

Photo credit: Zentrum für digitale Medienkompetenz